Research

Here are some of the research projects I've worked on. You might also be interested in my Google Scholar profile.Experts Don't Cheat: Learning What You Don't Know By Predicting Pairs [arXiv]

Daniel D. Johnson, Daniel Tarlow, David Duvenaud, Chris J. Maddison

ICML 2024

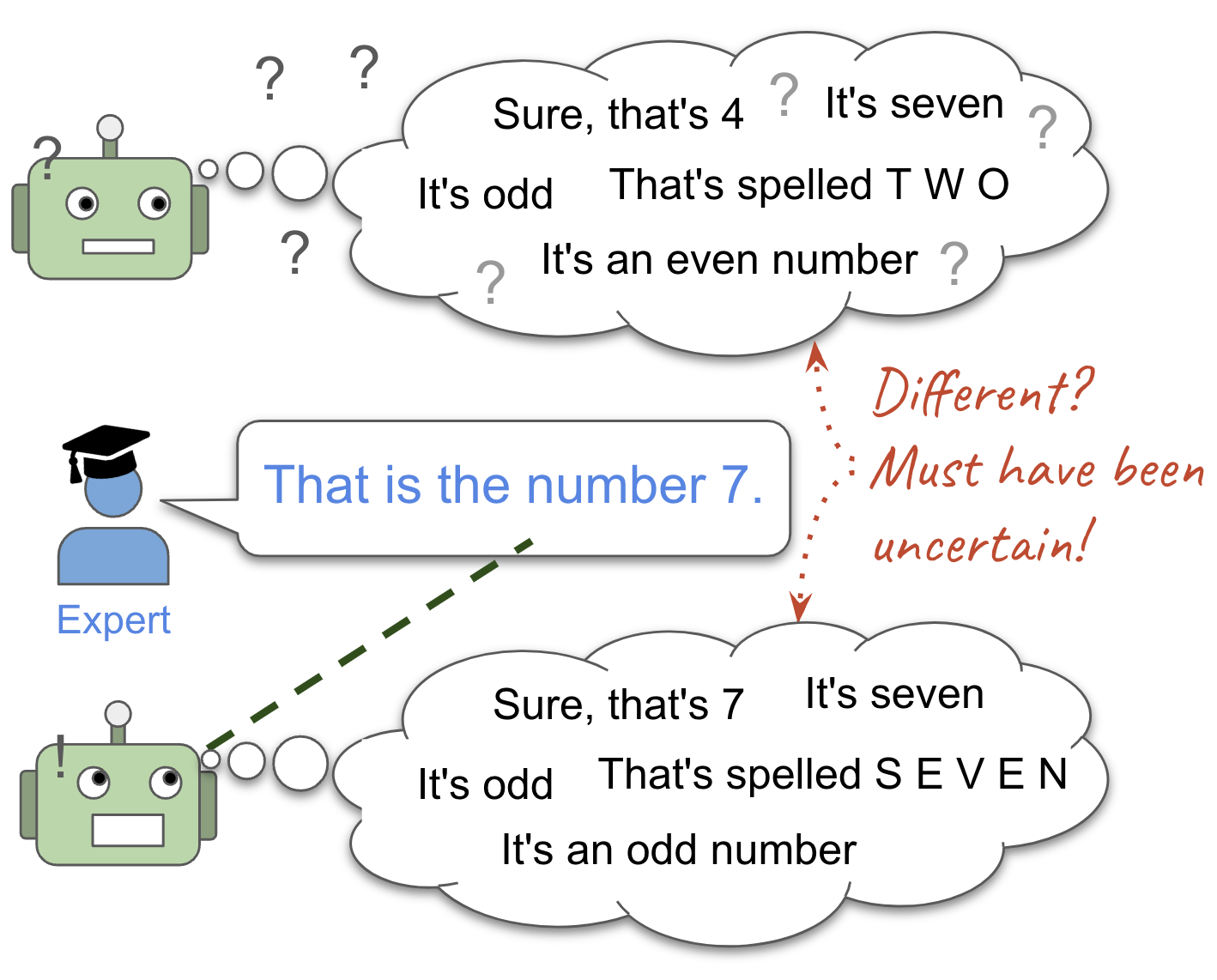

We propose a general strategy for teaching a model to quantify its own epistemic uncertainty: train it to predict pairs of independent responses drawn from the true conditional distribution, allow it to "cheat" by observing one response while predicting the other, then measure how much it cheats. Remarkably, we prove that being good at cheating is equivalent to being second-order calibrated, a principled extension of ordinary calibration that allows us to construct provably-correct frequentist confidence intervals for p(Y|X) and detect incorrect responses with high probability. We demonstrate empirically that our approach accurately estimates how much models don't know across a variety of tasks.

Penzai + Treescope: A Toolkit for Interpreting, Visualizing, and Editing Models As Data [arXiv, Penzai code, Treescope code]

Daniel D. Johnson

ICML Workshop on Mechanistic Interpretability 2024

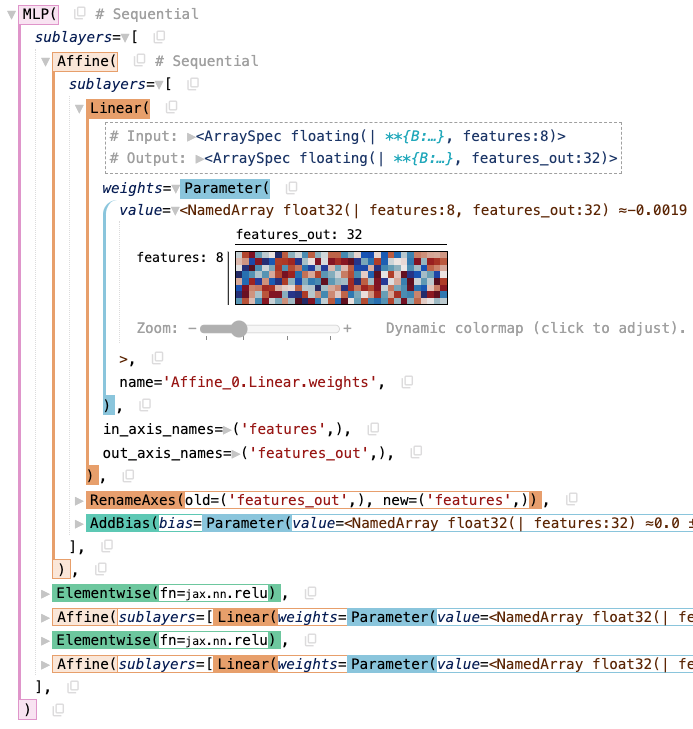

Penzai is a JAX library focused on making it easy to manipulate neural network models after they are trained, by exposing the forward pass using declarative combinators and providing toosl for modifying and analyzing them. Treescope is an interactive pretty-printer and array visualizer that can visualize both model inputs/outputs and the models themselves, which was originally designed with Penzai but now supports both JAX and PyTorch. The overall goal of the libraries is to reduce the barrier of entry for researchers trying to understand pretrained neural networks and steer their behaviors.

Parallel Algebraic Effect Handlers [paper, code]

Ningning Xie*, Daniel D. Johnson*, Dougal Maclaurin, Adam Paszke

International Conference on Functional Programming 2024

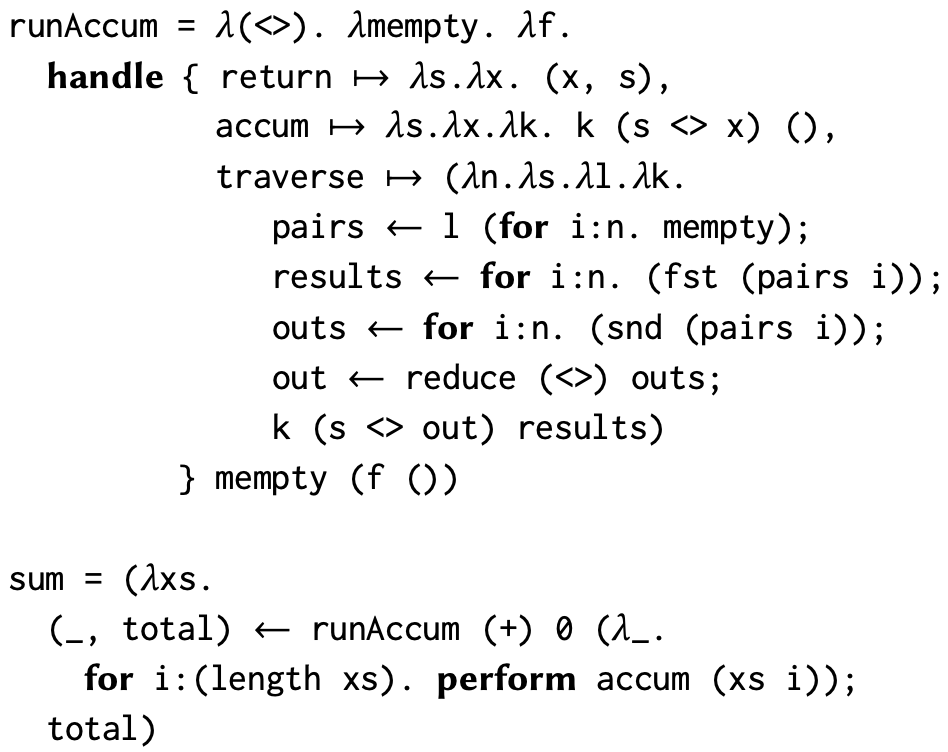

Motivated by the language Dex, we explore an extension of algebraic effects that ensure effects can be executed in parallel. We formalize this in an untyped lambda calculus and also provide a Haskell implementation.

R-U-SURE? Uncertainty-Aware Code Suggestions By Maximizing Utility Across Random User Intents [arXiv, code]

Daniel D. Johnson, Daniel Tarlow, Christian Walder

ICML 2023

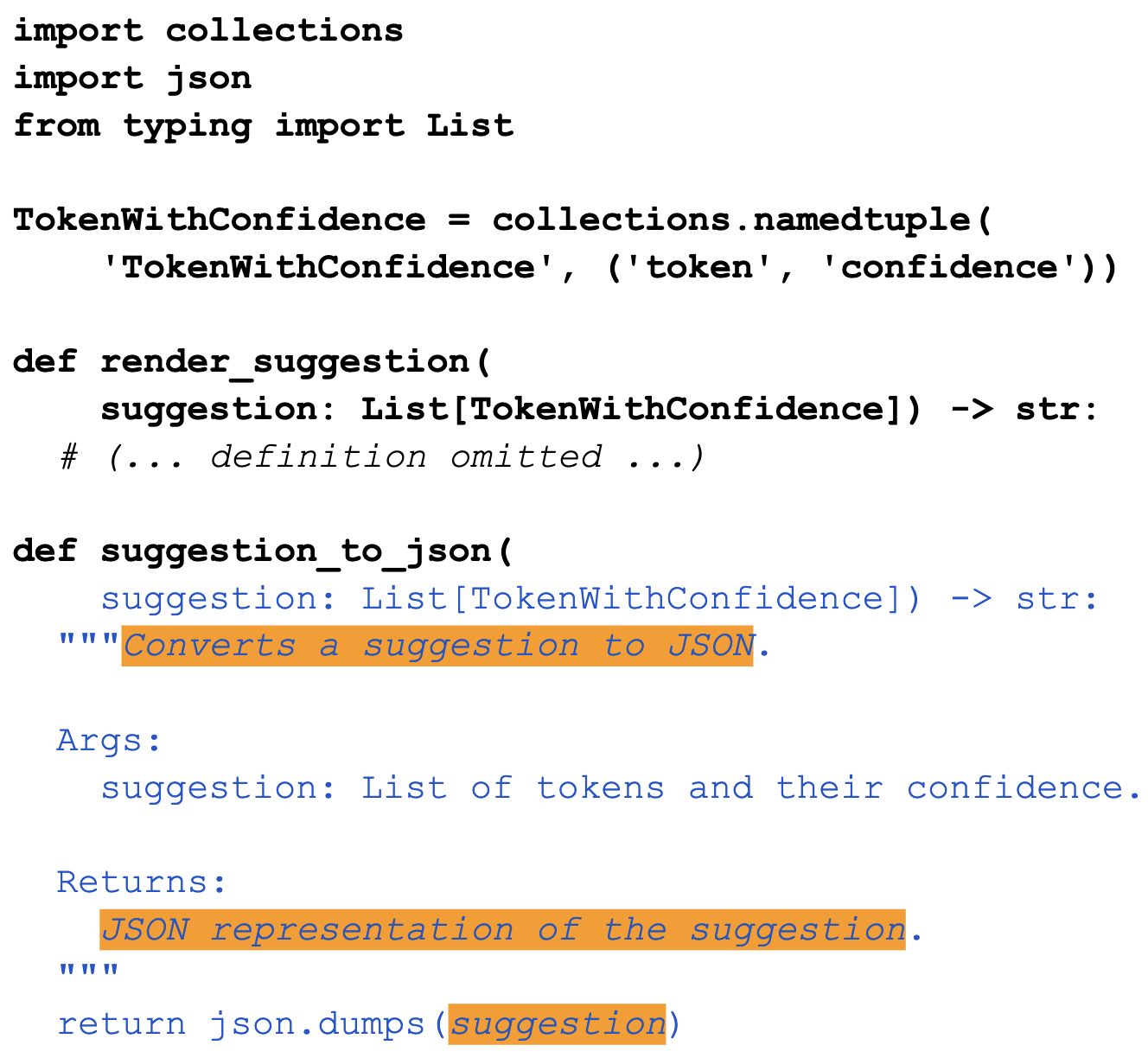

Large language model assistants can be very useful for software development, but often make incorrect guesses that users must go back and fix. We present an approach for building uncertainty-aware suggestions based on a decision-theoretic model of goal-conditioned utility, using random samples from a generative model as a proxy for the unobserved possible intents of the end user.

A Density Estimation Perspective on Learning From Pairwise Human Preferences [arXiv]

Vincent Dumoulin, Daniel D. Johnson, Pablo Samuel Castro, Hugo Larochelle, Yann Dauphin

Transactions on Machine Learning Research (2023)

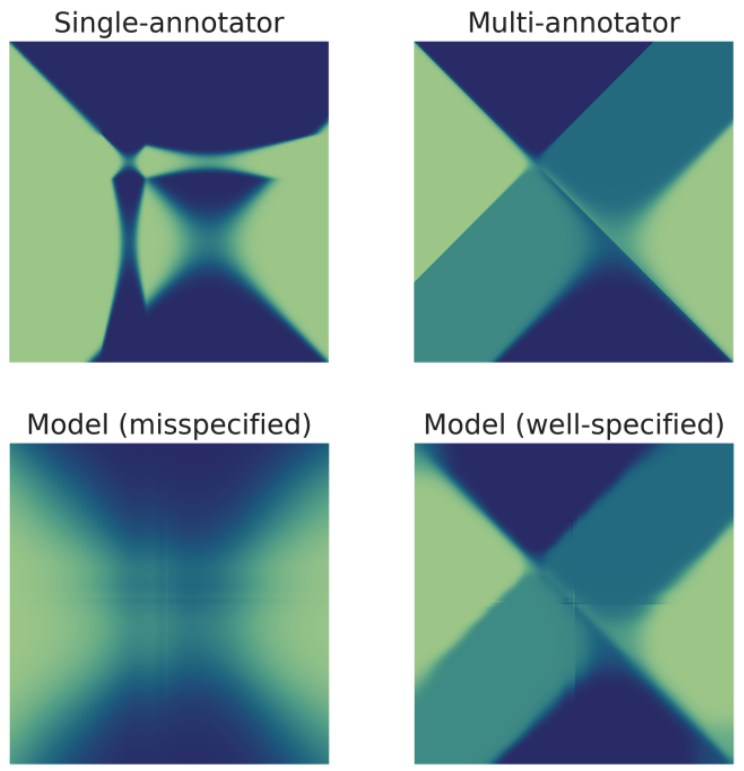

We propose an alternative interpretation of methods for learning from pairwise human preferences, by casting it as a density estimation problem for an implicit preference distribution rather than as a reinforcement learning problem. We also discuss "annotator misspecification", where wrong modeling assumptions about an annotator's preference distribution produces poorly-adapted models.

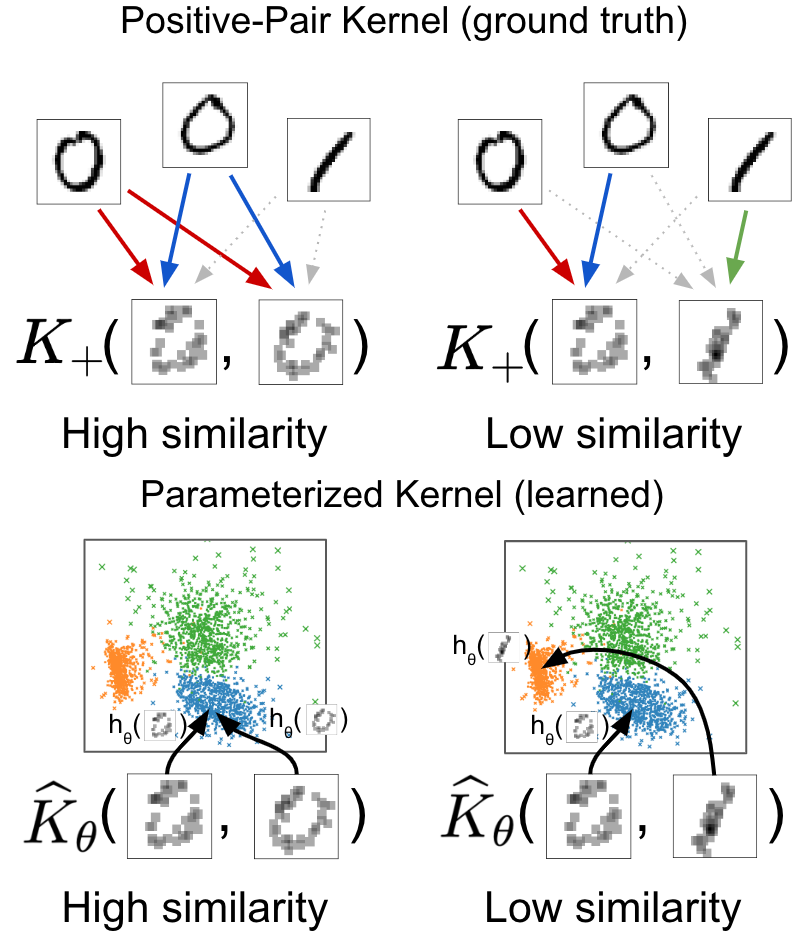

Contrastive Learning Can Find An Optimal Basis For Approximately View-Invariant Functions [arXiv]

Daniel D. Johnson, Ayoub El Hanchi, Chris J. Maddison

ICLR 2023

We reveal a surprising connection between contrastive learning objectives and kernel methods: the optimum of these objectives can be reinterpreted as a positive-definite kernel, and the spectral decomposition of this kernel yields a min-max optimal basis for supervised learning under the assumption that positive pairs have similar labels.

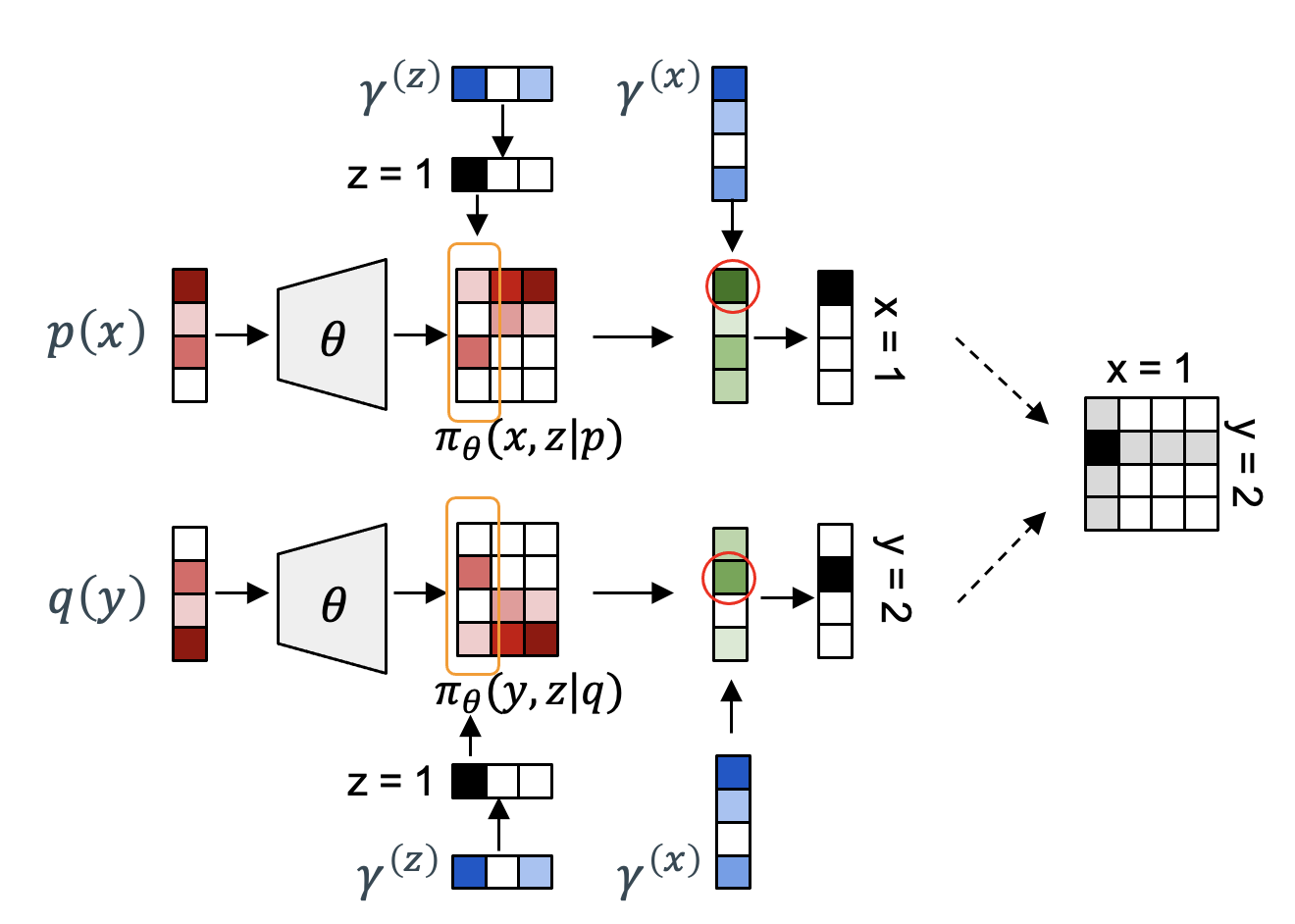

Learning Generalized Gumbel-Max Causal Mechanisms [arXiv, code]

Guy Lorberbom*, Daniel D. Johnson*, Chris J. Maddison, Daniel Tarlow, Tamir Hazan

NeurIPS 2021 (spotlight)

We generalize the Gumbel-max structural causal model to a family of parameterized causal models, which can be trained to minimize quantitative criteria such as the variance of counterfactual effects, and which then generalize to new counterfactual queries at test time.

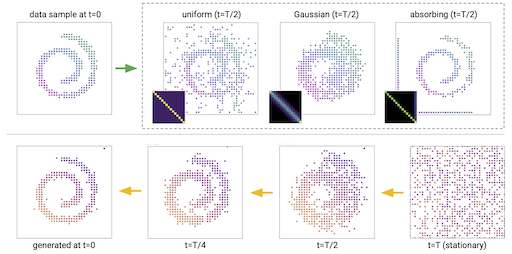

Structured Denoising Diffusion Models In Discrete State-Spaces [arXiv, code]

Jacob Austin*, Daniel D. Johnson*, Jonathan Ho, Daniel Tarlow, Rianne van den Berg

NeurIPS 2021

We introduce Discrete Denoising Diffusion Probabilistic Models (D3PMs), diffusion-like generative models for discrete data that introduce non-uniform structure into the corruption process, including transition matrices that mimic Gaussian kernels, matrices based on nearest neighbors, and matrices that introduce absorbing states. We show strong results with these models on both text and quantized images.

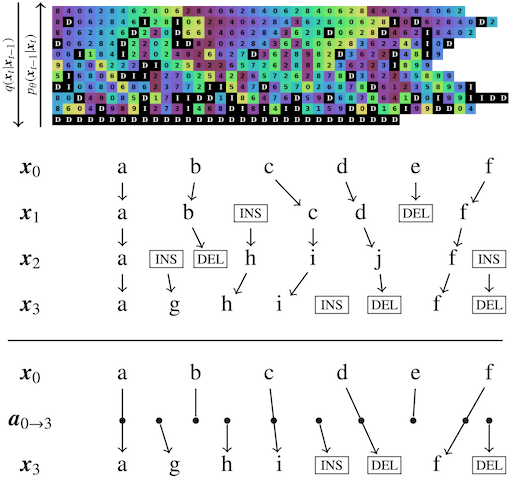

Beyond In-Place Corruption: Insertion And Deletion In Denoising Probabilistic Models [arXiv, code]

Daniel D. Johnson, Jacob Austin, Rianne van den Berg, Daniel Tarlow

2021 ICML Workshop on Invertible Neural Networks, Normalizing Flows, and Explicit Likelihood Models

We consider a class of discrete corruption processes and denoising models for sequence data that can insert and delete elements while still being efficient to train and sample from. We demonstrate that these models outperform standard in-place diffusion-like models on an arithmetic sequence task, and that when trained on the text8 dataset they can be used to fix spelling errors without any fine-tuning.

Dex programming language [code]

Multiple contributors

A research language for typed, functional array processing. I've made various contributions, including implementing extensible record and variant types, improving type inference and typeclass synthesis, and rewriting the pretty-printer. I also helped write the ICFP paper "Getting to the Point. Index Sets and Parallelism-Preserving Autodiff for Pointful Array Programming" (arXiv preprint).

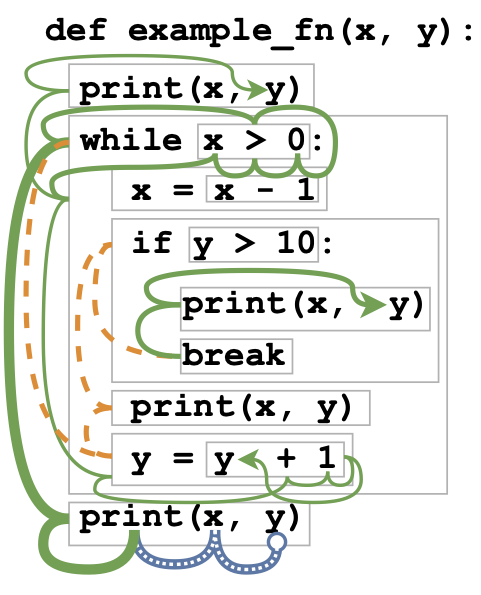

Learning Graph Structure With a Finite-State Automaton Layer [arXiv, talk, code]

Daniel D. Johnson, Hugo Larochelle, Daniel Tarlow

NeurIPS 2020 (spotlight presentation); also presented at GRL+ 2020

We propose a differentiable layer that learns to add new edges to a graph based on a continuous relaxation of the accepting paths of a finite-state automaton. This layer can reproduce static analyses of Python programs and, when combined with a larger graph-based model and trained end to end, improves performance on the variable misuse program understanding task.

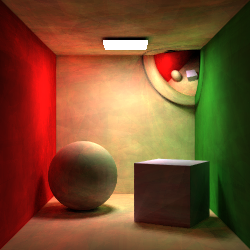

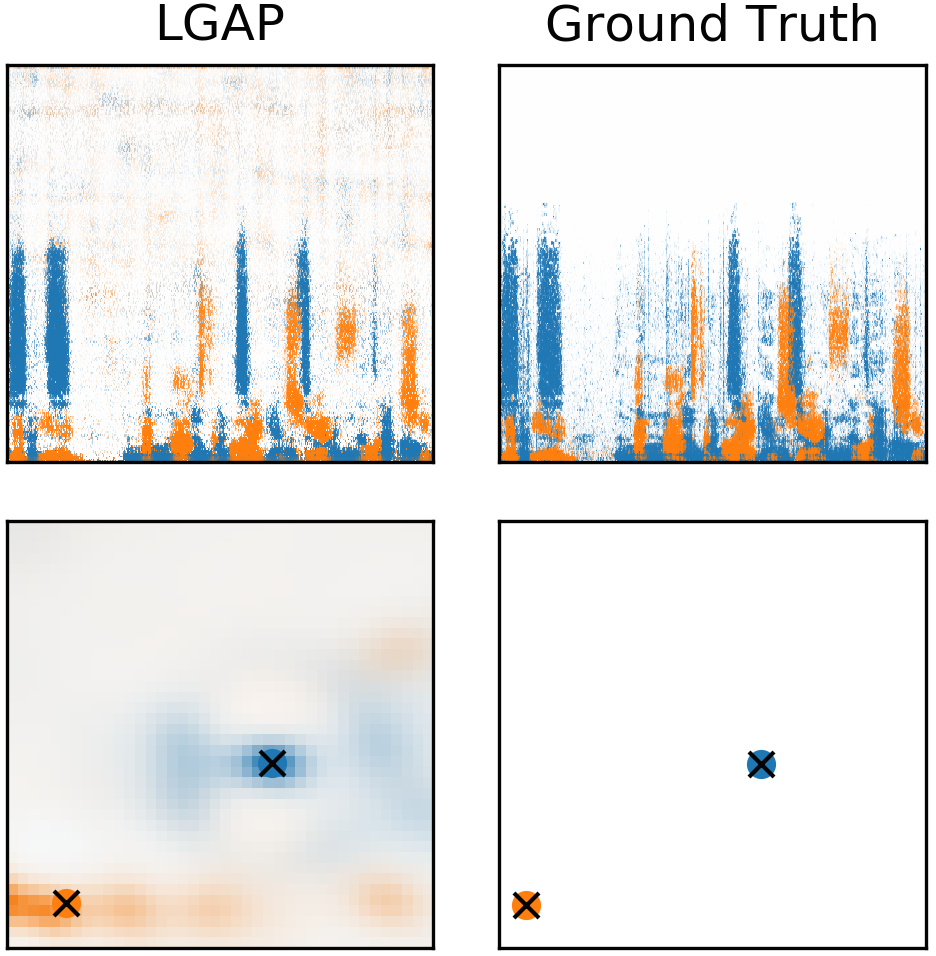

Latent Gaussian Activity Propagation: Using Smoothness And Structure to Separate And Localize Sounds in Large Noisy Environments [pdf, poster]

Daniel D. Johnson, Daniel Gorelik, Ross E. Mawhorter, Kyle Suver, Weiqing Gu, Steven Xing, Cody Gabriel, Peter Sankhagowit

NeurIPS 2018

We present an approach for simultaneously separating and localizing multiple sound sources recorded by multiple microphones using MAP inference and automatic differentiation. We start with a smoothness prior over the temporal, frequential, and spatial characteristics of the source sounds and use gradient ascent to find the most likely separation.

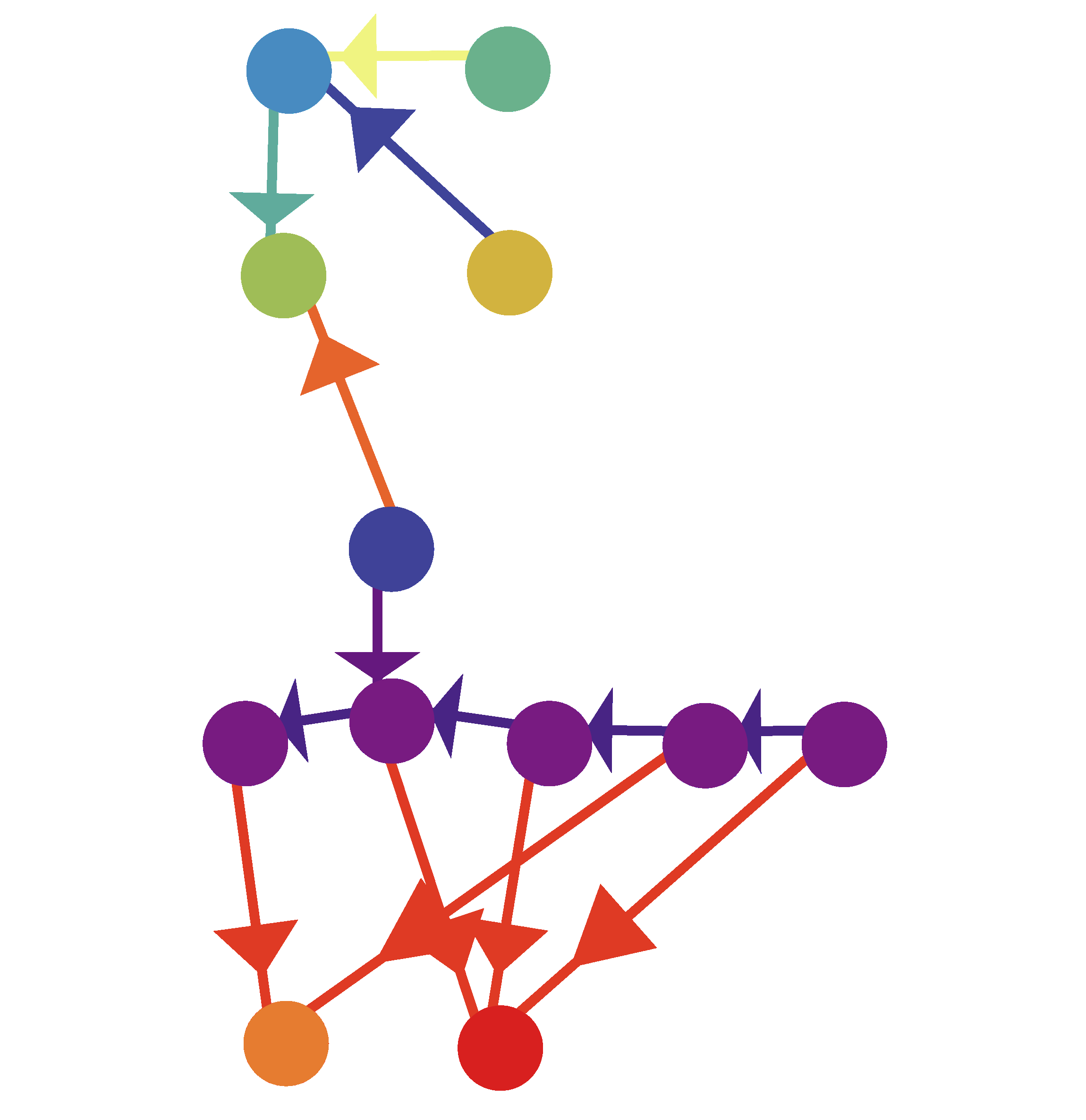

Learning Graphical State Transitions [pdf, talk, code, blogpost]

Daniel D. Johnson

ICLR 2017 (oral presentation)

This work introduces the GGT-NN, a model that uses a graph as an intermediate representation and learns to modify those graphs in response to textual input. The model is able to build knowledge graphs for most of the bAbI tasks and can also simulate the operation of simple Turing machines.

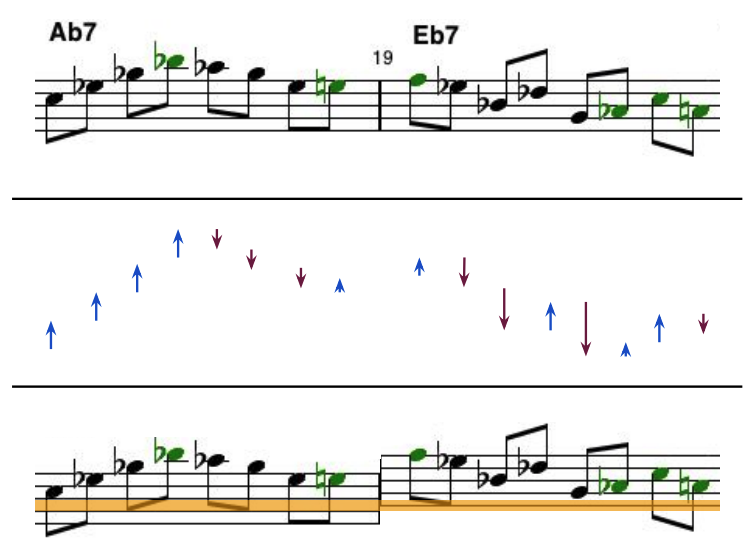

Learning to Create Jazz Melodies Using a Product of Experts [pdf, blogpost]

Daniel D. Johnson, Robert M. Keller, Nicholas Weintraut

International Conference on Computational Creativity 2017

We describe a neural network architecture that learns to generate jazz melodies over chord progressions. The predictions are generated by combining information from an "interval expert" that follows the melody contour and a "chord expert" that scores notes based on their relative position from the root note in the active chord.

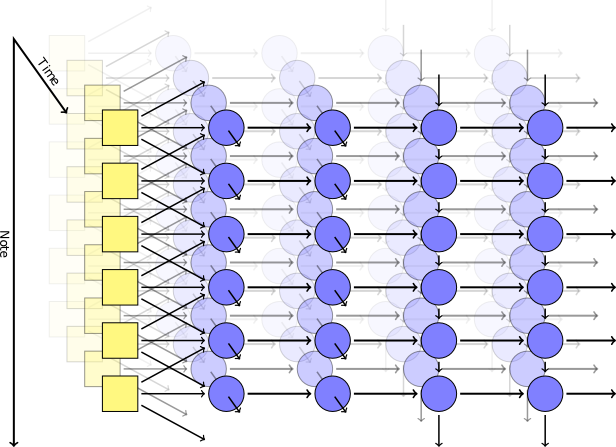

Generating Polyphonic Music With Tied-Parallel Networks [pdf, code, blogpost]

Daniel D. Johnson

EvoMusArt 2017

This work describes an architecture for generating polyphonic piano-roll music by using two arrays of LSTMs along perpendicular axes: time-axis LSTMs pass states forward in time, and note-axis LSTMs pass states upward across notes. The work started as a blog post, and was later published as a conference paper.